Modular Design in the Open Compute Project

Introduction

As a follow-up to my previous article on HP Moonshot’s modular design, this one is a study of modularity in the Open Compute Project (OCP).

The HP Moonshot applies modularity at the appliance level, i.e., the chassis is a complete operational unit in itself: you don’t need anything else to power and run it. The OCP approach is a little different: it exploits modularity at the rack level.

One of OCP’s founding charters was to create, and open source, designs and specifications for the four building blocks of a data center (Networking, Compute, Storage, and Rack & Power), and the community has done just that over the last seven years. Some of the OCP projects associated with these building blocks are:

- Networking - Wedge 100

- Compute - Yosemite V2, Tioga Pass

- Storage - Bryce Canyon, Lightning

- Rack & Power - Open Rack V2

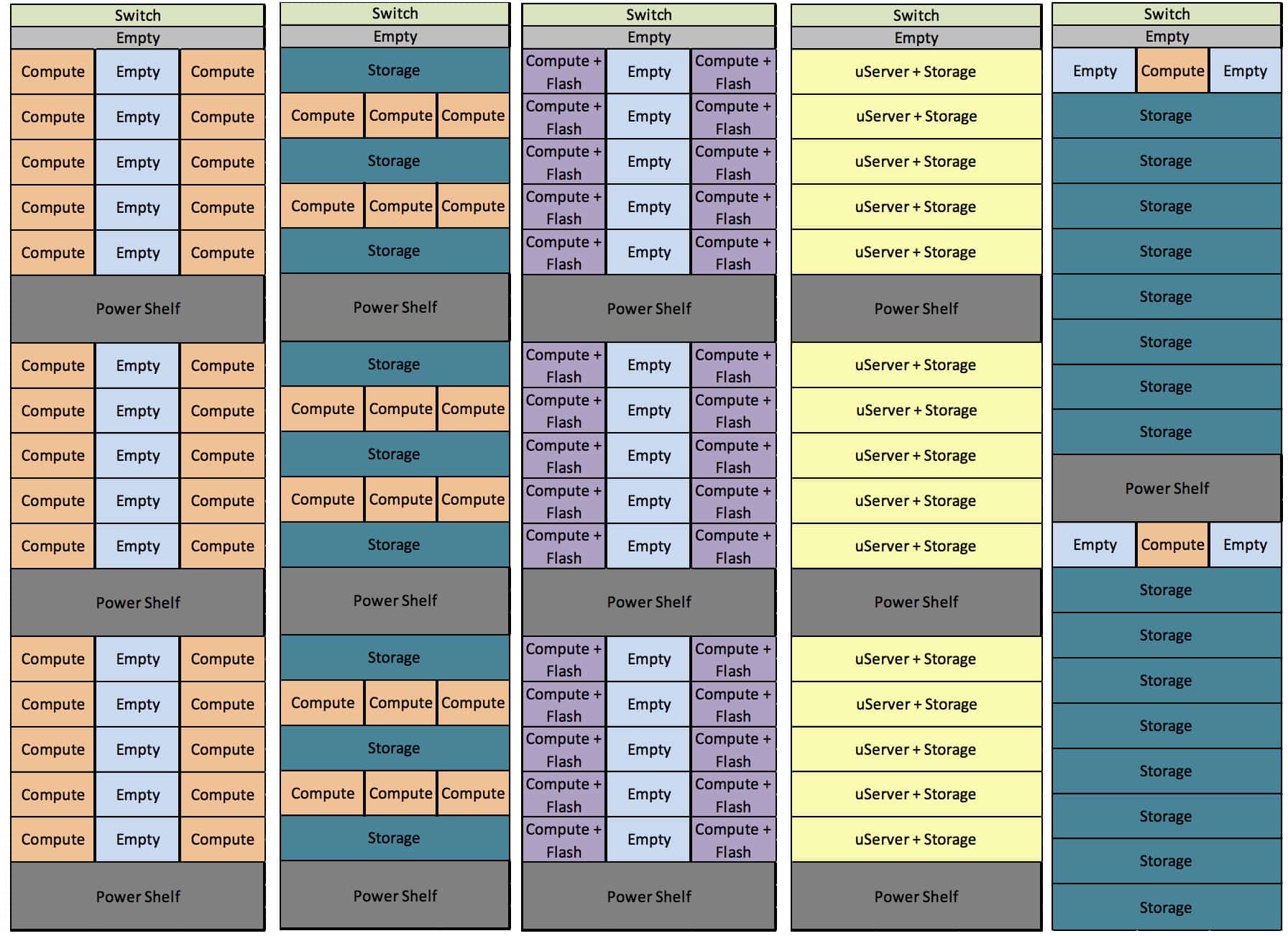

An Open Rack is then configured with the right balance of these building blocks for the application it will run.

The following image shows five such configurations. What they all have in common is the rack itself, the top-of-rack switch, and the power shelf; notice, however, how the balance of compute versus storage changes with the application.
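To make the rack-level idea concrete, here is a minimal Python sketch that models a configuration as a fixed set of shared components plus a variable compute/storage mix. The shared parts come from the paragraph above; the class name `RackConfig`, its fields, and the two configurations and their unit counts are hypothetical, chosen purely for illustration and not taken from any OCP specification or from the figure.

```python
from __future__ import annotations

from dataclasses import dataclass

# Components the article names as common to every configuration.
SHARED_COMPONENTS = ("Open Rack", "Top-of-rack switch", "Power shelf")


@dataclass(frozen=True)
class RackConfig:
    """Hypothetical model: shared rack infrastructure plus a variable mix."""

    application: str
    compute_units: int  # e.g. Yosemite V2 or Tioga Pass chassis (hypothetical count)
    storage_units: int  # e.g. Bryce Canyon or Lightning chassis (hypothetical count)

    def shared(self) -> tuple[str, ...]:
        # Every configuration reuses the same rack, switch, and power shelf.
        return SHARED_COMPONENTS

    def compute_share(self) -> float:
        """Fraction of the variable units that are compute rather than storage."""
        total = self.compute_units + self.storage_units
        return self.compute_units / total if total else 0.0


# Two hypothetical configurations: same shared parts, different balance.
web_tier = RackConfig("hypothetical web tier", compute_units=10, storage_units=2)
archive = RackConfig("hypothetical archive", compute_units=2, storage_units=10)

for rack in (web_tier, archive):
    print(rack.application, rack.shared(), f"compute share = {rack.compute_share():.2f}")
```

Only the compute/storage split differs between the two instances; everything in `SHARED_COMPONENTS` stays fixed, which is the property that lets a single rack design serve many applications.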

Power Shelf

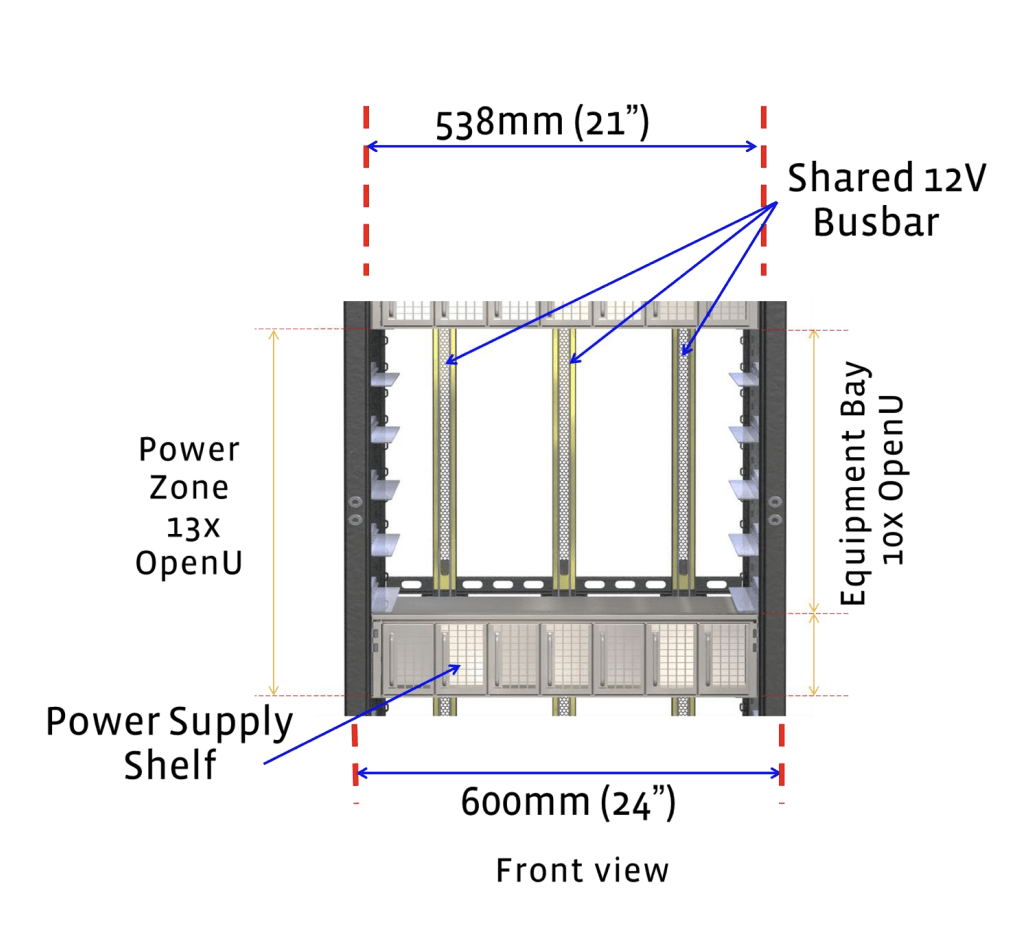

OCP appliances (compute servers, storage servers or JBODs, and graphics units or JBOGs) do not have power supplies of their own. Instead, a unit called a power shelf powers a portion of the rack. Open Rack V1 has three power shelves that can each provide 4,500 W, whereas Open Rack V2 has two power shelves of 6,600 W each. The total capacity is therefore roughly 13 kW in both cases (13.5 kW for V1 and 13.2 kW for V2).
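The rack totals follow directly from multiplying the number of shelves by the per-shelf capacity. Here is a minimal Python sketch of that arithmetic, using only the figures quoted in the paragraph above; the dictionary and function names are hypothetical, and the calculation ignores details such as power-supply redundancy within a shelf.

```python
# Shelf counts and per-shelf capacities (in watts) as quoted in this article.
OPEN_RACK_POWER = {
    "Open Rack V1": {"shelves": 3, "watts_per_shelf": 4500},
    "Open Rack V2": {"shelves": 2, "watts_per_shelf": 6600},
}


def total_capacity_watts(shelves: int, watts_per_shelf: int) -> int:
    """Rack power budget: number of power shelves times per-shelf capacity."""
    return shelves * watts_per_shelf


for name, spec in OPEN_RACK_POWER.items():
    total = total_capacity_watts(spec["shelves"], spec["watts_per_shelf"])
    print(f"{name}: {spec['shelves']} x {spec['watts_per_shelf']} W = {total} W ({total / 1000:.1f} kW)")
```

This prints 13500 W (13.5 kW) for V1 and 13200 W (13.2 kW) for V2, which is where the ~13 kW figure comes from: V2 reaches nearly the same budget with one fewer shelf.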

Vertical channels called busbars run from the power shelves, and the appliances plug into them at the back of the rack.

Yosemite V2

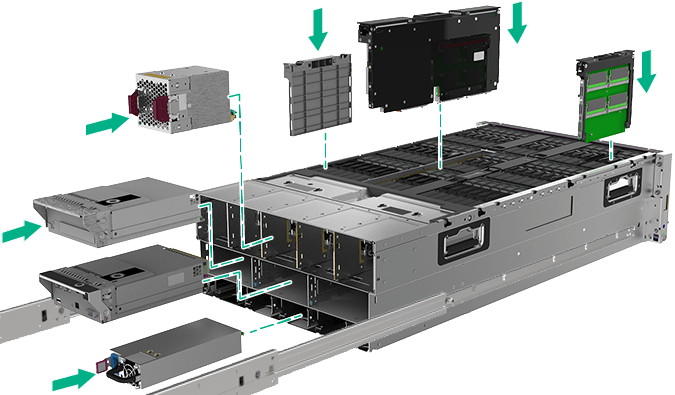

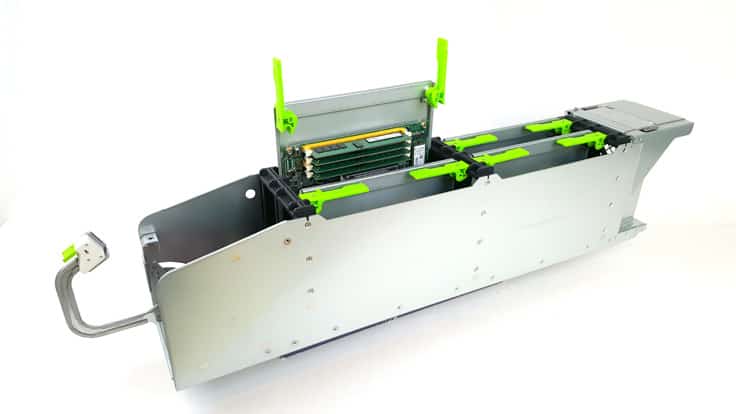

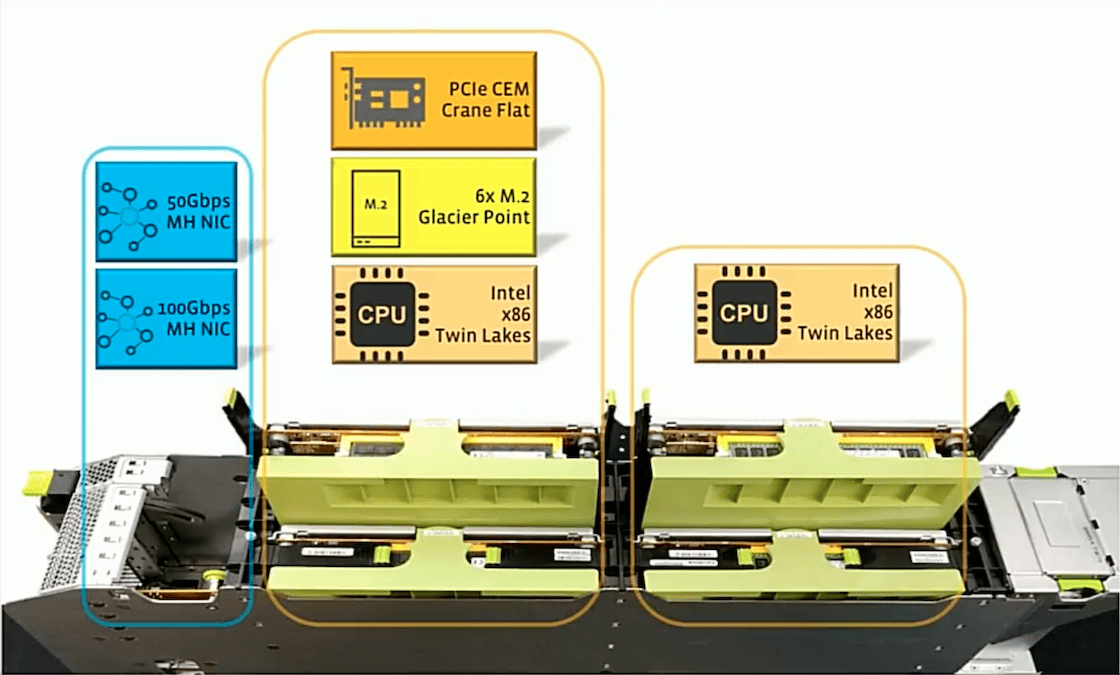

While the Bryce Canyon (storage server), Big Basin (GPU server), and Tioga Pass (high-performance compute server) chassis are all modular to some extent, I find the design of the 1-socket server chassis, Yosemite, the most interesting. In an earlier article, I dissected the design of Yosemite V1. The biggest drawback of the V1 chassis was that its cards were not hot-swappable; Yosemite V2 fixes this.

The new design makes the following two changes:

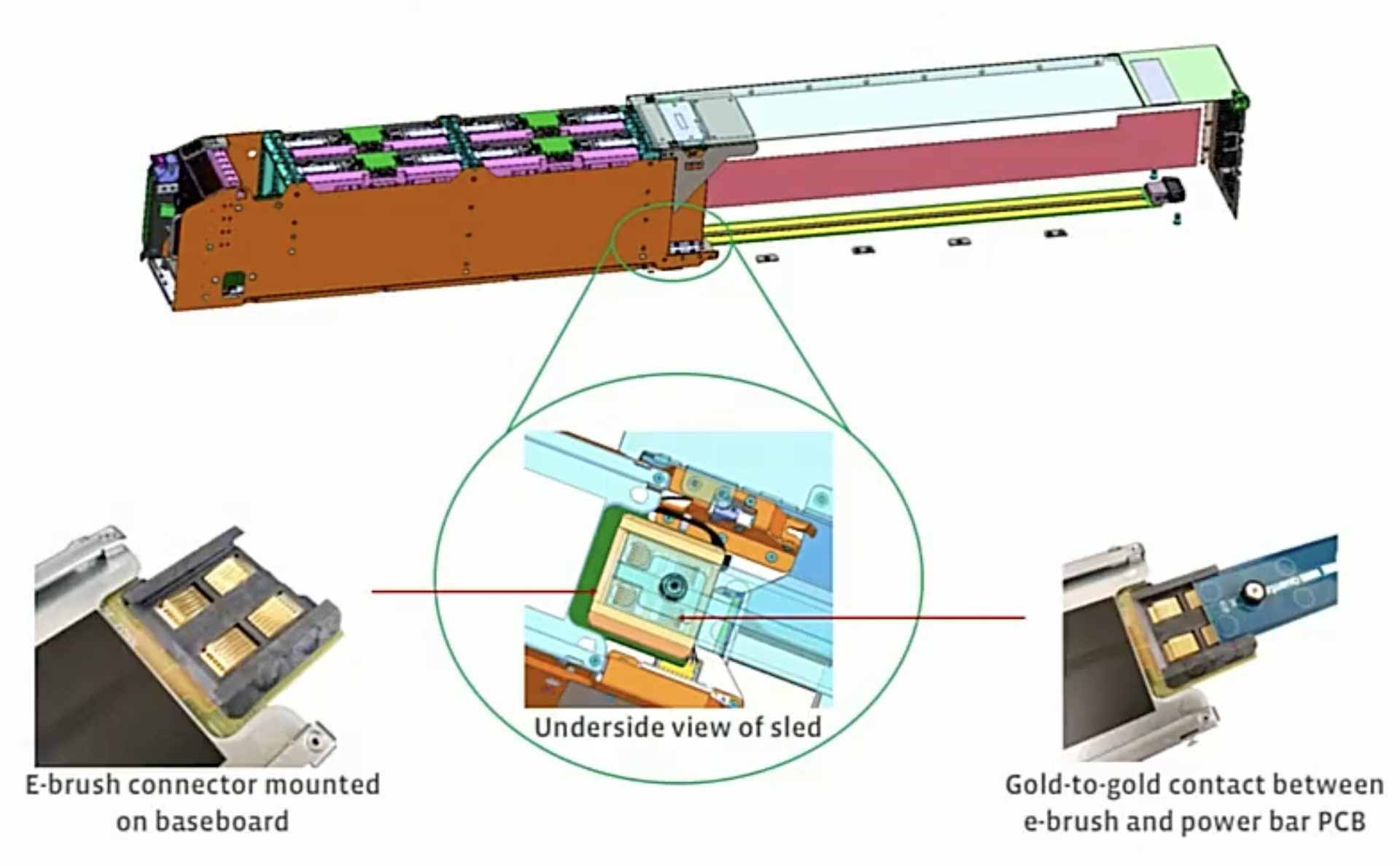

- The sled is rotated by 90 degrees, so the cards are now inserted from the top instead of the side.

- An E-brush and power rail at the bottom of the sled keep the cards inside powered even while the sled is pulled out for servicing.

Project Olympus

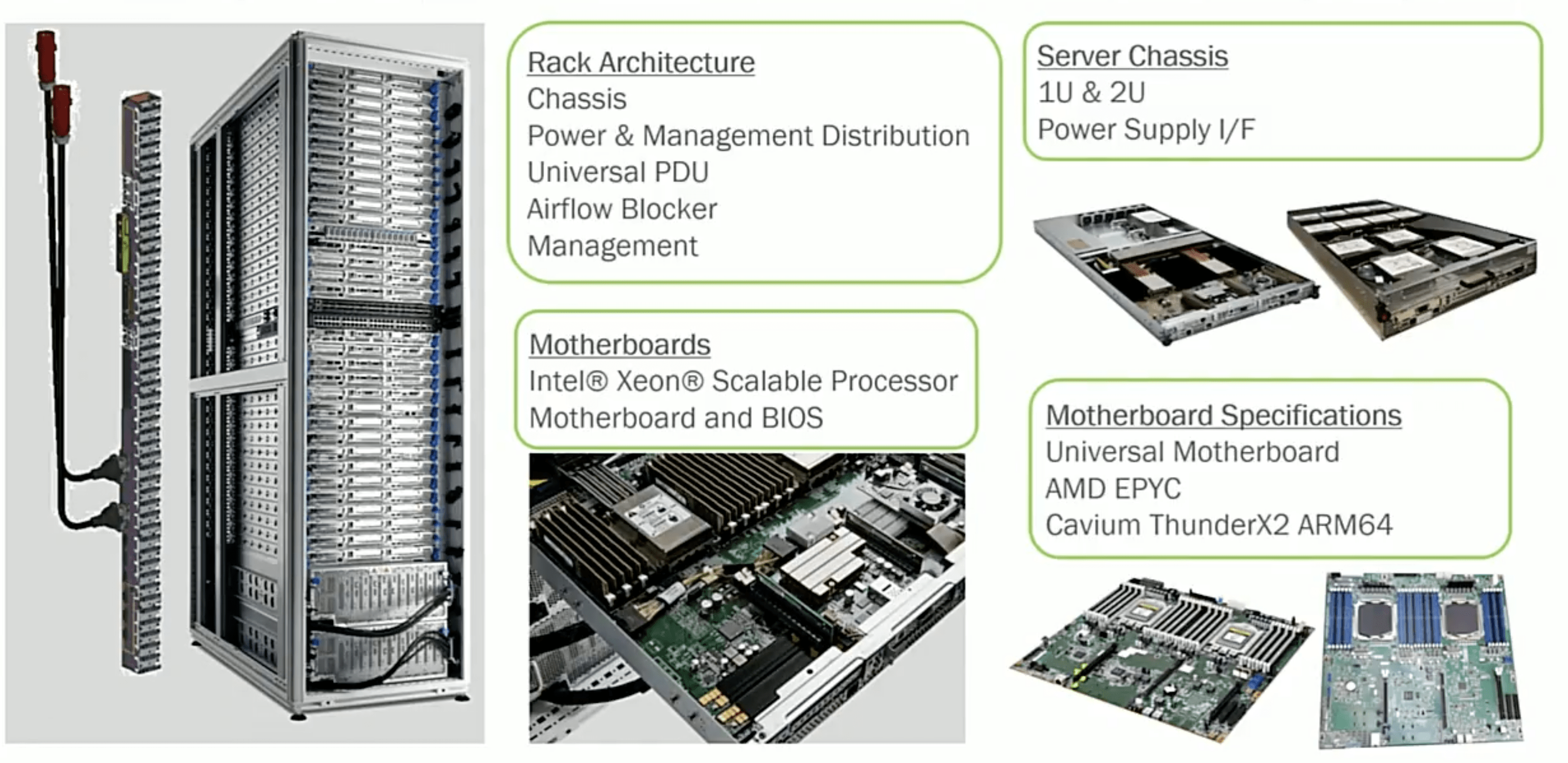

These are exciting times for the OCP community. For its Azure cloud, Microsoft designed and built all the components (rack, power, compute, storage, etc.) from scratch, and about a year ago it donated all of these designs and specifications to OCP under the name Project Olympus.

Project Olympus is also modular at the rack level, but Microsoft made one different design choice: it stuck with the standard 1U, 2-socket server design. It does, however, offer a few SKUs of the server motherboard to accommodate Intel, AMD, and Cavium ThunderX2 (ARM) processors.

Closing Thoughts

The pace of innovation at the Open Compute Project has truly been astounding. As a community, OCP is standardizing different types of building blocks, open sourcing the designs, partnering with manufacturers, and deploying this equipment effectively.

In my opinion, the OCP community is essentially made up of three groups of people:

- Facebook, Microsoft, Intel, Google, and the like: industry giants who are both big contributors to OCP and consumers of the equipment it produces

- Quanta, Wiwynn, and others: OEM manufacturers and solution providers, i.e., folks who know how to bring these designs to life at scale

- Community organizers and the folks running the show behind the scenes at OCP

Since the heaviest contributors to OCP are also the biggest consumers of its equipment, the OCP ecosystem forms a positive feedback loop. This gives every party involved strong motivation to innovate at a blistering pace, and that’s just what they are doing.

References

The images used in this article are from one of the following three sources (see the disclosure below):

- circleb.eu - OCP gear overview

- OCP summit presentations

- Hand drawn using Linea app on iPad

Other references

- Facebook press release - The end-to-end refresh of our server hardware fleet

- circleb.eu - OCP gear overview


Disclosure: No permission was obtained to use the images, but all sources have been credited right below each image and in the references section.